Prison Population Register-Index (1938-1955)

Automatic probabilistic indexing (PrIx) of the Prison Population Register-Index (1938-1955), a historical dataset containing more than 500,000 images of prisoner records. This project enables efficient textual searches within digitized images of these records by leveraging PrIx. Unlike Handwritten Text Recognition (HTR), which often struggles with accuracy in degraded or complex documents, PrIx offers a more flexible and robust approach to information retrieval.

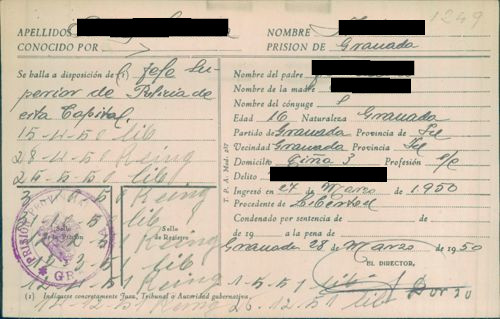

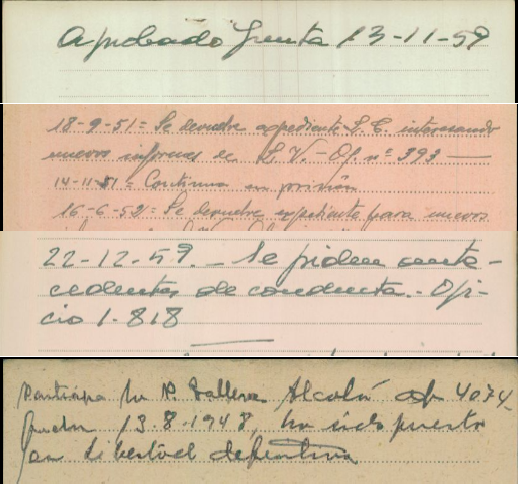

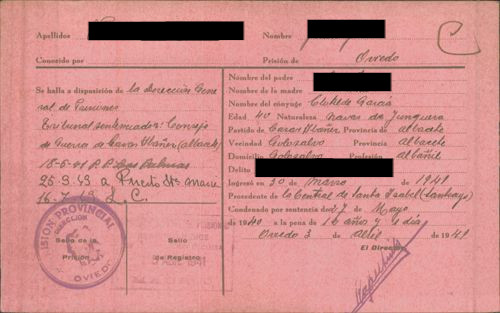

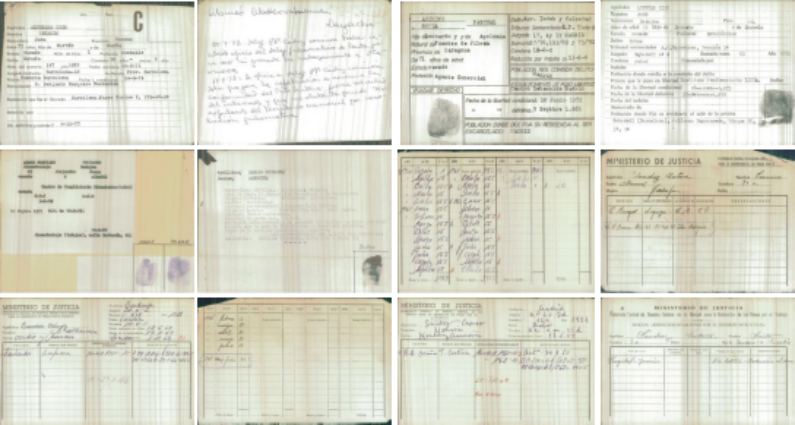

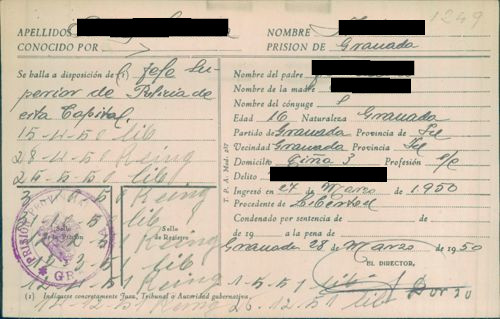

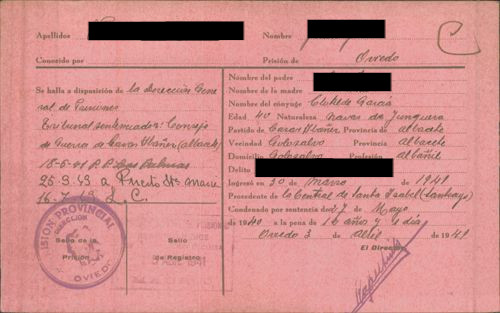

The documents originate from Spain’s post-civil war period, during which vast records on political prisoners were generated. These records include personal data, imprisonment details, and sentencing information. Given the variability in handwriting styles, document conditions, and archival quality, careful selection of training data for machine learning models was crucial. The project assesses the typographic, paleographic, and structural challenges inherent in these documents to optimize the indexing process.

Applying PrIx to large-scale historical archives, particularly those affected by poor legibility and diverse handwriting styles, facilitates historical research, improves access to archival materials, and supports ongoing efforts in digital humanities and transitional justice studies.

Moreover, due to legal requirements under Spanish law, certain data within these records has been automatically anonymized by removing extracted data from specific fields, as well as redacting those sections from the corresponding images.

This work underscores the importance of advanced indexing techniques in preserving and democratizing access to historical records, allowing researchers and the public to explore Spain’s complex past more effectively.

Sample Pages

Challenges

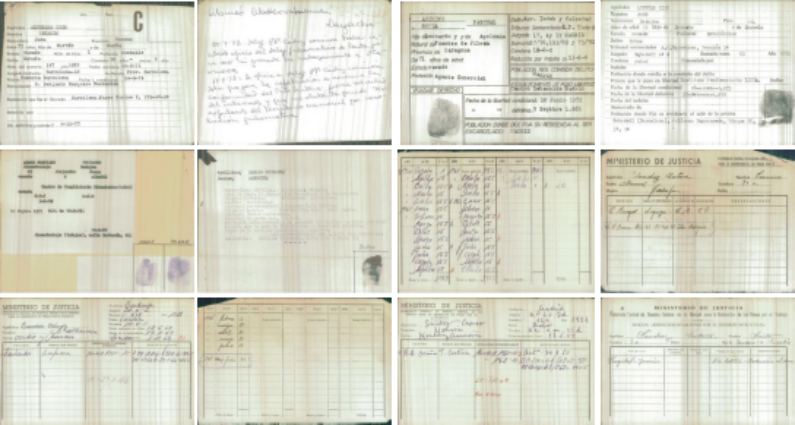

The automatic probabilistic indexing (PrIx) of the Prison Population Register-Index (1938-1955) presents several challenges due to the variability and complexity of the historical documents involved. These challenges arise from inconsistencies in document structure, handwriting diversity, and the need for automated data processing to improve searchability and comply with legal requirements.

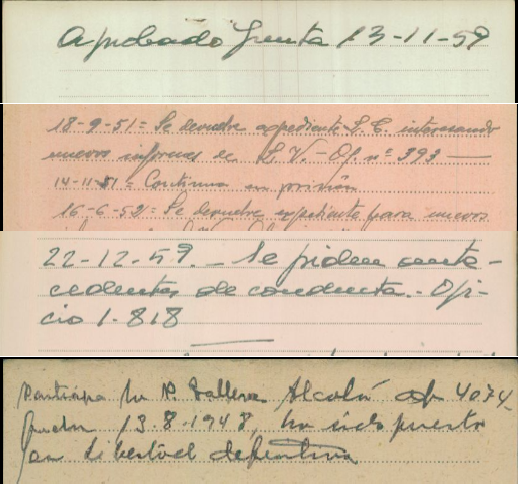

One of the primary difficulties is that, although the documents were structured as forms, the writers did not always adhere to the designated fields. Information was often written outside the predefined areas, making it harder to automatically extract and categorize data. This lack of strict formatting complicates the indexing process, requiring advanced machine learning techniques to detect and recognize handwritten text correctly.

Another challenge stems from the fact that the documents were written by multiple individuals over a long period, resulting in significant variations in handwriting styles, ink types, and writing instruments. These variations affect the accuracy of text recognition models, as they must be trained to handle a wide range of typographic and paleographic differences. The presence of faded ink, smudging, and degraded paper further exacerbates the difficulty of extracting reliable information.

The anonymization process introduces additional complexity. The fields requiring anonymization did not always appear in a fixed position within the forms; instead, they were sometimes placed freely within the document. This variability makes it challenging to locate and redact sensitive information automatically, necessitating robust text and layout recognition methods to detect these fields regardless of their position.

Moreover, the dataset consists of multiple form templates, each with distinct structures and field placements. This diversity requires adaptive models capable of identifying and processing different document formats without extensive manual intervention. Standardizing extraction across various templates ensures consistency in indexing and search functionality.

Another critical aspect is handling abbreviations. Many entries contain shortened versions of words, which must be automatically expanded for effective searchability. This requires a sophisticated text normalization system capable of recognizing common historical abbreviations and converting them to their full-length equivalents while preserving contextual meaning.

Results

588.477 pages

Successfully processed more than 500,000 digitized images of historical prisoner records. The project effectively applied machine learning techniques to extract, index, and anonymize textual information from these documents, enabling efficient search capabilities despite the challenges posed by handwriting variability, document degradation, and structural inconsistencies.

To evaluate the performance of the system, several key metrics were analyzed, categorized into global, printed text, and handwritten text recognition results:

- Character Error Rate (CER): 4.4 (global), 0.7 (printed), 9.5 (handwritten)

- Word Error Rate (WER): 9.7 (global), 1.0 (printed), 20.1 (handwritten)

- Average Precision (AP): 0.94 (global), 0.99 (printed), 0.87 (handwritten)

These results demonstrate high accuracy, particularly for printed text, while maintaining strong performance for handwritten text despite its inherent complexity. The probabilistic indexing approach allowed for effective information retrieval even in cases where handwriting was highly variable or difficult to decipher.

All processed data and results have been made available on the client’s platform, ensuring accessibility for researchers and institutions interested in exploring Spain’s historical prison records. This project represents a significant step forward in preserving and democratizing access to historical archives through advanced indexing and search technologies.